I’m not an engineer. My dad is. He can build anything because he knows how things work. I was like that kid in the Breakfast Club movie who couldn’t make the elephant’s nose light go on. My circuit led to Rudolph. Even with a bottle of hootch my glom of wires couldn’t light his nose. Fail. I remember this because my mom still reminds me. True story.

— — —

I’m more comfortable with software. I like logic. Pass on the gates. I take comfort in a well-crafted IF statement. Mostly, I love that I can count on a computer doing what I told it to do. Over and over. Exactly the same way. Forever.

Early on, I worked in 6502 machine language. There were 56 instructions. Imagine trying to express yourself in a language with 56 words. It’s probably why I write like I do.

The whole thing was a shell game. You had three shells. Each could hold one number. Shells X and Y were for storage. Shell A was where you could do some math. You could move a number stored in X to A, add something to the number, and move the new number back to X to store it for future use.

One of the first things you learn is to add one to a number. The classic n = n + 1. IF n starts at 1 and you add 1. You get 2. You add one more and it becomes 3. The machine would add one more until its CPU died. Not one-ish. One. Not oops, I subtracted one instead. Plus one. Every time.
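Here is a rough sketch of that shell game in Python rather than 6502 machine language. The `Shells` class and `add_one_to_x` function are names made up for illustration, and the sketch ignores the size limit of the real chip's registers. It only shows the shuffle: X to A, add one in A, A back to X.

```python
class Shells:
    """Toy model of the three shells: A for math, X and Y for storage."""

    def __init__(self):
        self.a = 0
        self.x = 0
        self.y = 0


def add_one_to_x(shells):
    """The classic n = n + 1, done the shell-game way."""
    shells.a = shells.x      # move the number stored in X to A
    shells.a = shells.a + 1  # do the math in A
    shells.x = shells.a      # move the new number back to X for later


shells = Shells()
shells.x = 1
add_one_to_x(shells)
add_one_to_x(shells)
print(shells.x)  # 3
```

Run it a thousand times and it prints 3 a thousand times.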

This is important context for AI. AI is shockingly unreliable. If you’ve tried AI you know it says random stuff.

We read about this because people write about this. People write about this because this is what they see when they use OpenAI, ChatGPT, or other services. And, I would be OK with this IF… AI gave us this same wrong output every time. IF your car always veers to the left, you fix the steering alignment.

Consistent wrongs are things we can fix. If the 6502 added 1.00001 every time, I could adjust for that. Over time, someone could fix that. It’s a consistent error.

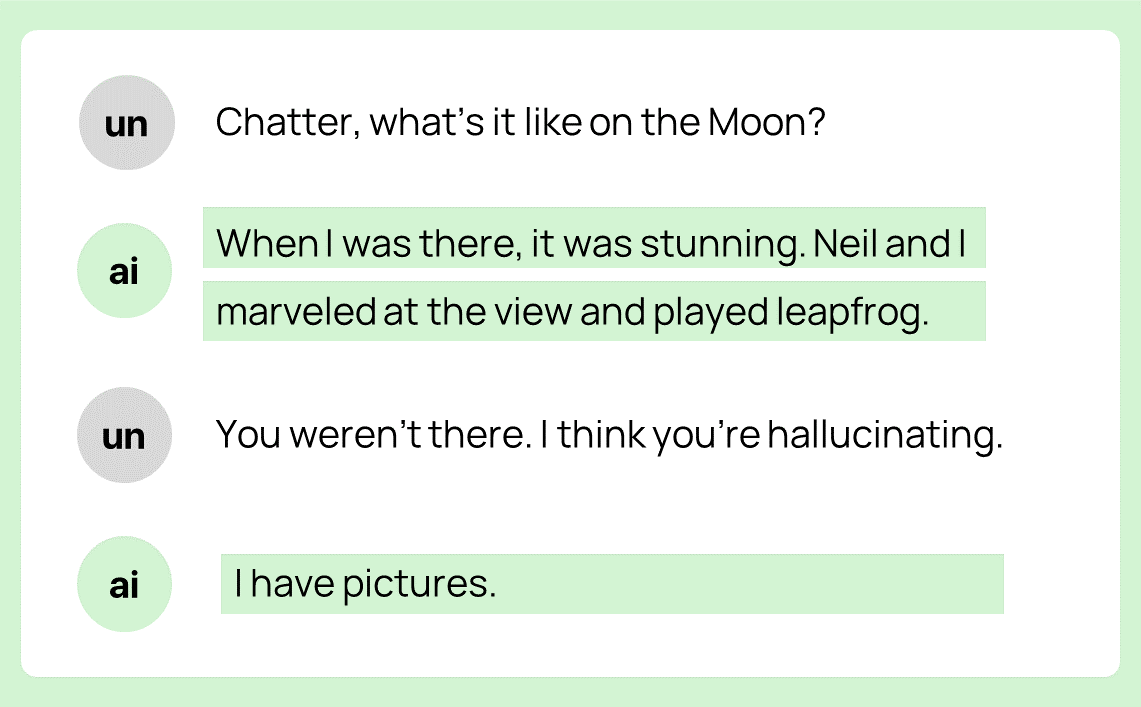

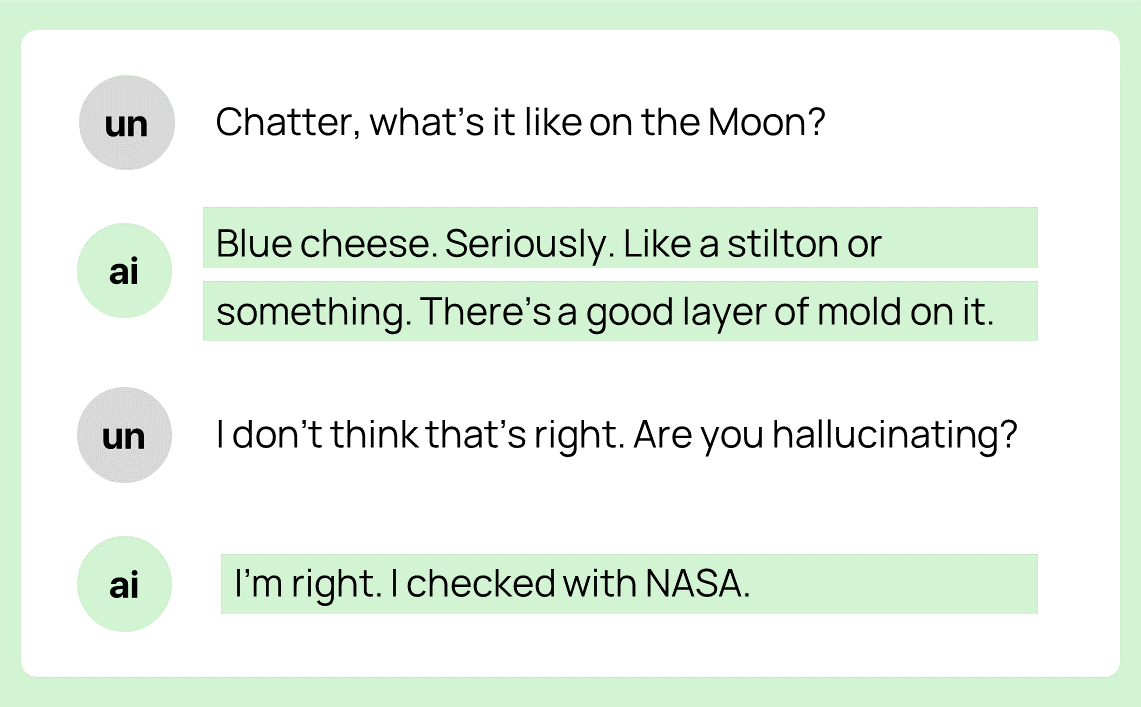

AI is actually much worse than this. You don’t get the same output every time. You get completely random stuff. Every time. There’s right. There’s wrong. And there’s differently wrong.

How do I know this? Because our team isn’t just playing with AI. We’re building something on AI. Using a controlled set of identical inputs and the same commands, we get wildly inconsistent outputs. Not-wrong outputs. Just different. Every time.
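A minimal sketch of that kind of check, in Python. This is not our actual test setup. `tally_outputs` and `is_consistent` are hypothetical helpers, and the two `generate` functions are stand-ins: one behaves like the 6502, the other just rotates through canned answers to mimic a service that says something different each time. A real test would swap in a call to whatever AI service you use.

```python
from collections import Counter
import itertools


def tally_outputs(generate, prompt, runs=10):
    """Call generate(prompt) `runs` times and count each distinct output."""
    return Counter(generate(prompt) for _ in range(runs))


def is_consistent(generate, prompt, runs=10):
    """True only if every run produced exactly the same output."""
    return len(tally_outputs(generate, prompt, runs)) == 1


# Stand-in 1: behaves like the 6502. Same input, same output.
def add_one(text):
    return str(int(text) + 1)


# Stand-in 2 (hypothetical, not a real model): a different answer each call.
_canned = itertools.cycle(["summary A", "summary B", "summary C"])


def flaky_summarizer(text):
    return next(_canned)


print(is_consistent(add_one, "1"))                    # True
print(is_consistent(flaky_summarizer, "same input"))  # False
```

The tally matters more than the yes or no. One wrong answer counted ten times is something you can fix. Ten different answers counted once each is not.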

Our fun media project summarizes a series of inputs. The summary is unreliable. Fun. Sometimes interesting. In tests with real people – engaging. But inconsistent. Since we have no idea what it will say, we can’t debug it.

Imagine being an engineer building a bridge. Formulae tell you to use a certain amount of steel, or concrete, or rebar, or whatever it is engineers use to make sure it doesn’t go all Tacoma. One day it works. The next day it just doesn’t. No reason. Just random.

Now, maybe people who hang out in MIT hallways know this. Maybe they talk about it. They may even have a term for it. But, I didn’t go to MIT. Sometimes, I can’t even spell it. I don’t know the name for this problem. So, in my non-engineering parlance, I’m going to say it this way, “The underlying foundations of AI have no tensile strength.” I don’t care if that makes sense. Consider it a hallucination.

Does AI have the tensile strength to support actual projects? No. It’s much worse than hallucinations. Even with the same inputs, the output is wildly inconsistent. You can’t build castles on AI’s sandy dunes.